18/03/2023

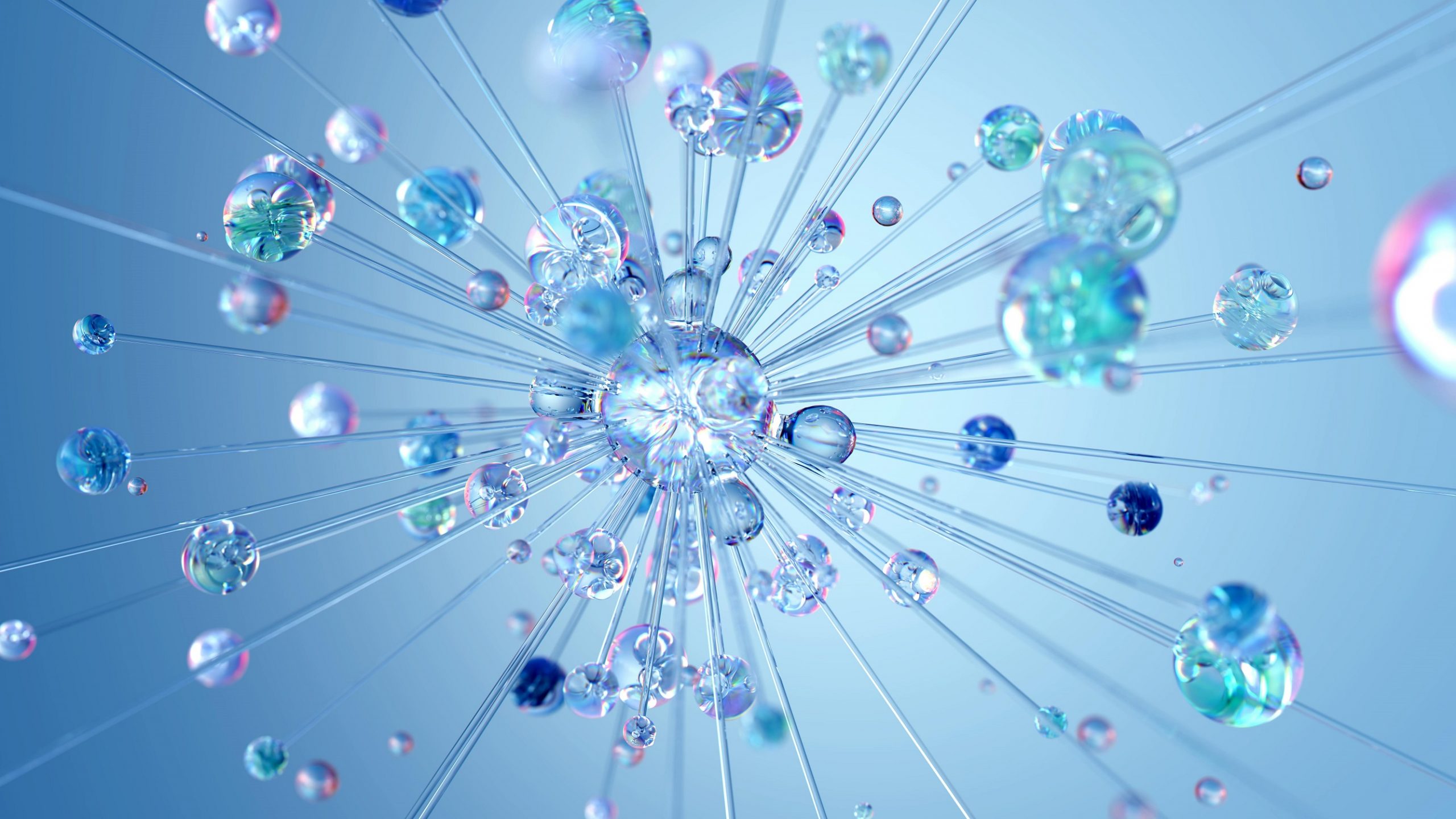

We explain what artificial intelligence is, how it is used today, some of its functions, and how models such as BERT are helping AI.

You’ve surely thought at some point how useful it would be to have J.A.R.V.I.S., the assistant from Iron Man, in your life, or K.I.T.T., that fantastic car that helped stop so many bad guys thanks to the detailed information it provided at just the right moment. Her, the replicants from Blade Runner, HAL from 2001: A Space Odyssey: the artificial intelligence seen in films has little to do with the real interaction we have with machines today.

It is fair to say that what appears in films corresponds to the so-called “superintelligence” concept rather than to the current state of play in app and web development. It’s also true that, although the promises of science fiction are far from becoming everyday realities, artificial intelligence is already a common working tool for the most innovative businesses.

How do we use AI at CaixaBank Tech?

We have been working with artificial intelligence at the CaixaBank Group for a long time. In fact, we were on the team that taught Watson to speak Spanish at the beginning of the last decade. Since then, we have developed many AI-based apps, some aimed at helping employees in their daily tasks and others focused on improving customer service and assistance, such as our CaixaBankNow virtual assistant.

We call this type of app a 100% thinking app, because we design them to “reason” much as a human being or J.A.R.V.I.S. would, answering back just like in the movies. That raises design questions. What would the app say to users? What interactive elements would it offer? How would it accompany the information it provides us with?

Conversational interfaces with AI

Conversational interfaces with voice and text are the backbone of these apps, alongside other graphic elements such as buttons, forms or lists that should make interaction as simple and visual as possible. Predictive text and highlighted responses are further elements that should accompany the interaction. For highlighted responses, for example, we are exploring Q&A models with BERT, using TensorFlow.js.

Because the interaction is conversational, understanding natural language is essential. This relies on, for example, intent classification algorithms, entity recognition, semantic disambiguation, and graph databases that represent areas of knowledge and the relations between them.
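To make intent classification and entity recognition more concrete, here is a deliberately minimal sketch in Python. It is not how a production assistant works (a real system would typically use trained models such as the BERT-based ones mentioned in this article); it only illustrates the two tasks with word overlap and a regular expression. The intent names, example utterances and function names are all hypothetical and invented for this illustration.

```python
import re

# Hypothetical example utterances per intent (illustrative only, not real training data).
EXAMPLES = {
    "check_balance": ["what is my account balance", "how much money do I have"],
    "block_card": ["block my card", "I lost my credit card"],
}

# Assumed pattern for a very simple "amount" entity, e.g. "50 euros" or "12.5 eur".
AMOUNT_PATTERN = re.compile(r"(\d+(?:\.\d+)?)\s*(?:euros?|eur|€)")


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def build_vocabularies(examples):
    """Collect the set of words seen in the example utterances of each intent."""
    return {
        intent: {token for utterance in utterances for token in tokenize(utterance)}
        for intent, utterances in examples.items()
    }


def classify_intent(text, vocabularies):
    """Return the intent sharing the most words with the text, or None if none match."""
    tokens = set(tokenize(text))
    scores = {intent: len(tokens & vocab) for intent, vocab in vocabularies.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def extract_amounts(text):
    """Return every monetary amount found in the text as a float."""
    return [float(match) for match in AMOUNT_PATTERN.findall(text.lower())]


if __name__ == "__main__":
    vocabularies = build_vocabularies(EXAMPLES)
    print(classify_intent("I lost my card", vocabularies))
    print(extract_amounts("Transfer 50 euros to Ana"))
```

The word-overlap score stands in for a learned classifier: swapping it for a model keeps the same interface (text in, intent label out), which is what the rest of a conversational app would depend on.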

In this way, we humanise AI thanks to algorithms such as BERT

These new applications need to be dynamic and human in their explanations, which means using natural language generation technology to express information naturally. To do this, we use deep learning models such as GPT-2/3 or BERT.

Another fundamental feature of this kind of application is content customisation. Just as human beings know how to adapt to the person they are interacting with, adjusting both the content explained and the format used to the speaker’s profile, these apps also need to be able to understand what kind of profile they are addressing and customise the information they provide, always in line with user privacy criteria.

Other functions performed by Artificial Intelligence

In the same vein, thinking apps have to be able to recommend and be proactive, even anticipating possible user needs. To do this, it is vital to generate a user context through embeddings, i.e. latent representations of the application world that preserve the main features of users and their associations and relations. These embeddings feed the advanced recommendation algorithms to be used.
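As a rough illustration of how embeddings can drive recommendations, the sketch below ranks items by cosine similarity between a user vector and item vectors. Everything here is assumed for the example: the three-dimensional vectors are hand-written rather than learned, and the item names and the `recommend` function are hypothetical. In practice the embeddings would come from a trained model and have many more dimensions.

```python
import math

# Hypothetical, hand-written item embeddings; real ones would be learned by a model.
ITEM_EMBEDDINGS = {
    "savings_tips": [1.0, 0.0, 0.0],
    "travel_insurance": [0.0, 1.0, 0.0],
    "mortgage_simulator": [0.0, 0.0, 1.0],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(user_embedding, item_embeddings, top_k=2):
    """Return the names of the top_k items most similar to the user embedding."""
    ranked = sorted(
        item_embeddings,
        key=lambda name: cosine_similarity(user_embedding, item_embeddings[name]),
        reverse=True,
    )
    return ranked[:top_k]


if __name__ == "__main__":
    # Hypothetical user whose context leans strongly towards the first dimension.
    user = [0.9, 0.1, 0.0]
    print(recommend(user, ITEM_EMBEDDINGS))
```

Cosine similarity is only one possible choice. It compares direction rather than magnitude, which is convenient when the embeddings of very active and less active users differ mainly in scale.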

Thinking apps must also be able to process images and videos, as well as voice, whether through image processing techniques that classify and extract relevant information or by generating images from a series of input data. Along these lines, for instance, we are also working with graph neural networks and studying DALL-E advances.

Another fundamental feature of these apps is adaptability to the environment and, therefore, an omnichannel approach. The app also adapts to user mobility and available devices, transferring information from one channel to another transparently. For example, mobile phones are particularly useful for scanning information with their camera and using it through AI algorithms. In turn, PCs or even TV screens can be much more useful for reviewing graphic details and analysing data.

Thinking apps must also benefit from the use of biometric technology to support authentication tasks and transparently improve app security for users thanks to, for example, behavioural biometrics.

AI architecture

Event-driven architectures, microservice orchestration, continuous monitoring through anomaly detection algorithms based on autoencoders and topic modelling, MLOps... The infrastructure and technology behind this new app paradigm are not simple and are constantly evolving, with many technological challenges still to be overcome. Nonetheless, by proceeding step by step and working in an agile way, validating this new way of interacting with our end users through MVPs, we are moving closer to the 100% thinking app concept and to offering experiences that start to recall those “super assistants” in films.
