03/04/2023

We explore how artificial intelligence helps solve testing problems and which AI tools we can use in our testing processes.

At CaixaBank Tech, we keep a particularly close eye on the advances in artificial intelligence. One of the latest trends is that AI is starting to play a fundamental role in the software development lifecycle, and especially in the software testing phase.

Testing: Instability in test suites

The instability of test suites when software is updated, and the difficulty of knowing which tests to run for a given code change, are some of the problems of traditional testing. These problems not only make test maintenance difficult but also slow down the testing phase and, in turn, time to market, because large subsets of tests end up being run to validate small changes in the code.

Testing solutions with AI

To combat these problems, testing solutions that use artificial intelligence are being developed. They free developers to focus on other tasks, such as increasing code coverage, and allow them to build higher-quality software products with fewer resources and shorter release times.

Some of the AI-based technologies that allow for improvements in the testing process are the following:

1. Self-healing tools with artificial intelligence

Self-healing is a technology based on machine learning that allows test suites to repair themselves automatically when the code of the graphical interfaces of our applications or web pages changes because their components have been updated.

This technology emerged because traditional testing tools require excessive modifications to test batteries for even small changes or updates to our code. Such changes cause the locators that identify each web element within our test suites to break, and the tests become invalid. Every update therefore wastes a great deal of time and effort on test maintenance, which is neither optimal nor, in many cases, acceptable. Testing frameworks based on self-healing (like Healenium) can identify changes in the page or interface and, through machine learning algorithms, automatically repair broken locator paths in our test suites. This minimises maintenance time, improves the stability of automated tests and allows tests to adapt more easily to changes in the application and environment.
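To make the idea concrete, here is a minimal sketch of a self-healing locator in Python. It is not Healenium's algorithm and it does not use Selenium: `Element`, `find_by_id`, `features`, `similarity` and `heal` are hypothetical names, the page is modelled as a plain list of elements, and a simple attribute-overlap (Jaccard) similarity stands in for the machine learning model a real tool would use. When the strict locator breaks, the sketch falls back to the element that most resembles a snapshot recorded the last time the test passed.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Element:
    """Hypothetical stand-in for a DOM element (not a Selenium WebElement)."""
    tag: str
    attrs: dict[str, str] = field(default_factory=dict)
    text: str = ""


def find_by_id(page: list[Element], element_id: str) -> Element | None:
    """Strict locator: the element whose id matches exactly, else None."""
    for el in page:
        if el.attrs.get("id") == element_id:
            return el
    return None


def features(el: Element) -> set[tuple[str, ...]]:
    """Tag, visible text and every attribute key/value pair, as comparable tuples."""
    return {("tag", el.tag), ("text", el.text)} | {
        ("attr", key, value) for key, value in el.attrs.items()
    }


def similarity(a: Element, b: Element) -> float:
    """Jaccard similarity of the two elements' feature sets (0.0 to 1.0)."""
    fa, fb = features(a), features(b)
    return len(fa & fb) / len(fa | fb)


def heal(page: list[Element], element_id: str, snapshot: Element,
         threshold: float = 0.3) -> Element | None:
    """Use the strict locator; if it breaks, fall back to the closest match."""
    found = find_by_id(page, element_id)
    if found is not None:
        return found
    best = max(page, key=lambda el: similarity(el, snapshot), default=None)
    if best is not None and similarity(best, snapshot) >= threshold:
        return best
    return None


# Snapshot recorded the last time the test passed.
snapshot = Element("button", {"id": "submit-btn", "class": "btn primary", "type": "submit"}, "Pay now")

# After a UI update, the button's id was renamed, so the strict locator breaks.
page = [
    Element("input", {"id": "card", "type": "text"}),
    Element("button", {"id": "pay-btn", "class": "btn primary", "type": "submit"}, "Pay now"),
    Element("a", {"id": "help", "class": "link"}, "Help"),
]

healed = heal(page, "submit-btn", snapshot)
print(healed.attrs["id"])  # pay-btn
```

The `threshold` parameter is an assumed safeguard: without it, the locator would "heal" to whichever element happened to be least dissimilar, even on an unrelated page.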

2. AI-based visual testing

Another technique currently being introduced is visual testing based on computer vision algorithms. But what is visual testing? Why is this type of testing necessary, and what role does AI play?

Imagine that we want to test a website with 21 visual elements and we want to validate characteristics like visibility, height, width and background colour for each of them. If we need a code assertion to test each of these, we will need 84 code assertions. So far, so good… but now we wonder: On which browser will the site be displayed? On which operating system will the browser be run? What is the size of the screen on which it will be displayed? All these combinations could result in thousands of lines of code and any one of them could be susceptible to change with each new version of the site, making test maintenance unfeasible. This is why we need another approach to testing besides functional testing: visual testing.
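The arithmetic behind this explosion is easy to check. The 21 elements and four properties come from the example above; the browser, operating system and screen-width lists are illustrative assumptions, chosen only to show how quickly the count grows.

```python
from itertools import product

# From the example: 21 visual elements, each checked for four properties.
elements = 21
properties = ["visibility", "height", "width", "background colour"]
assertions_per_config = elements * len(properties)

# Illustrative test matrix (assumed values, not taken from the article).
browsers = ["Chrome", "Firefox", "Edge"]
operating_systems = ["Windows", "macOS", "Linux"]
screen_widths = [360, 768, 1024, 1440, 1920]

configurations = list(product(browsers, operating_systems, screen_widths))
total_assertions = assertions_per_config * len(configurations)

print(assertions_per_config)  # 84
print(len(configurations))  # 45
print(total_assertions)  # 3780
```

Even this modest matrix yields 3,780 assertions to write and maintain, any of which may need updating when the site changes.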

Visual testing captures the visual part of a website or of an application's graphical interface and compares it with the expected design. In other words, it helps to detect “visual bugs” on the page, as distinct from strictly functional bugs. Visual errors occur more frequently than we might think, and while functional tests check functional behaviour, they are not designed to verify how a website is rendered. This is why we need visual testing to detect visual errors. Visual testing can be carried out in two main ways: manually or through automated tests.

Manual visual testing

It consists of a group of people manually inspecting pairs of screenshots to find the differences. These differences are very difficult to detect in many cases, and when the number of combinations grows and there are multiple pages to test, this approach becomes unfeasible. Automated visual tests emerged in an attempt to solve this problem.

Automated visual testing

These tests, which emerged as an attempt to imitate automated functional tests, try to verify the visual appearance of an entire page with a single code assertion, rather than checking the properties of each visual element. They capture a bitmap of the screen at various points and iterate over it, comparing the hexadecimal colour code of each pixel with that of a baseline bitmap. If any value differs, an error is generated.
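A minimal sketch of this pixel-by-pixel comparison, assuming each bitmap is represented as rows of `#RRGGBB` hex colour strings (a simplification; real tools work on decoded image buffers). `diff_pixels` and `assert_visually_equal` are hypothetical helper names.

```python
Bitmap = list[list[str]]  # rows of "#RRGGBB" colour codes


def diff_pixels(baseline: Bitmap, capture: Bitmap) -> list[tuple[int, int]]:
    """Return the (x, y) coordinates of every pixel whose colour differs."""
    if len(baseline) != len(capture) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(baseline, capture)
    ):
        raise ValueError("bitmaps must have the same dimensions")
    return [
        (x, y)
        for y, (row_a, row_b) in enumerate(zip(baseline, capture))
        for x, (a, b) in enumerate(zip(row_a, row_b))
        if a.lower() != b.lower()  # hex codes compared case-insensitively
    ]


def assert_visually_equal(baseline: Bitmap, capture: Bitmap) -> None:
    """The single assertion for the whole page: fail on any differing pixel."""
    diffs = diff_pixels(baseline, capture)
    if diffs:
        raise AssertionError(f"{len(diffs)} pixel(s) differ, first at {diffs[0]}")


baseline = [
    ["#FFFFFF", "#FFFFFF", "#000000"],
    ["#FFFFFF", "#000000", "#000000"],
]
capture = [
    ["#ffffff", "#FEFEFE", "#000000"],
    ["#ffffff", "#000000", "#000000"],
]
print(diff_pixels(baseline, capture))  # [(1, 0)]
```

Note that a single near-white `#FEFEFE` pixel, invisible to the eye, is enough to make `assert_visually_equal` fail for the whole page.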

Unlike humans, these tests detect differences quickly and consistently, which makes test suites easier to maintain. However, they are far from perfect: these algorithms suffer from the so-called “snapshot test problem”. Since pixels are not visual elements, font smoothing algorithms or image resizing can produce differences at the pixel level that lead to false positives. The problem is even worse when, instead of static content, the page also contains dynamic content (news, ads, etc.). To solve this problem, AI-driven visual testing tools have been developed in recent years.
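The following sketch illustrates the snapshot test problem with made-up grayscale values (0 is black, 255 is white) for a one-pixel strip across the edge of a glyph, rendered twice with slightly different font smoothing. The values are illustrative assumptions, not measurements.

```python
# One row of pixels crossing a letter's stroke, as grayscale values.
baseline = [255, 255, 200, 90, 0, 0, 90, 200, 255, 255]
# Same text with different font smoothing: only the anti-aliased edge shades move.
rerender = [255, 255, 198, 93, 0, 0, 93, 198, 255, 255]

# Strict comparison: every index where the values are not identical.
differing = [i for i, (a, b) in enumerate(zip(baseline, rerender)) if a != b]
# Largest per-pixel change, on the 0-255 scale.
max_delta = max(abs(a - b) for a, b in zip(baseline, rerender))

print(len(differing))  # 4
print(max_delta)  # 3
```

A strict comparison flags 4 of the 10 pixels even though no pixel changes by more than 3 of 255 shades, a difference no human would perceive: a false positive.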

Automated visual testing through AI

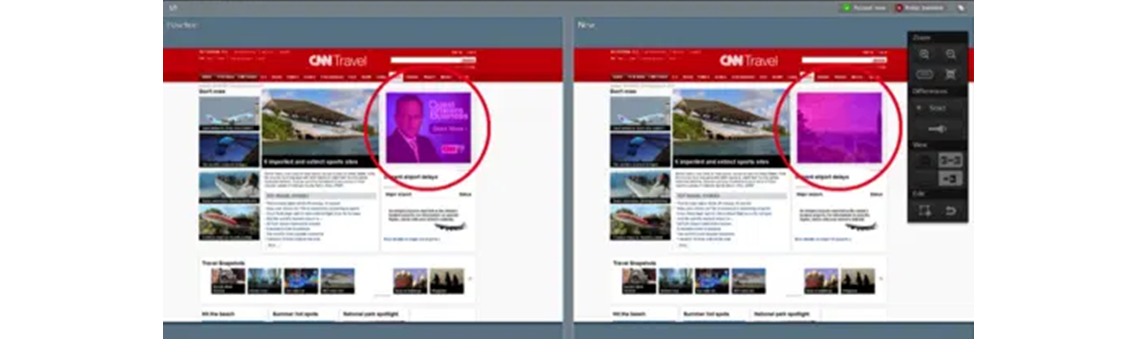

AI-driven visual testing uses computer vision algorithms to detect and report differences between the final rendering and the intended design, helping to focus more exhaustive testing on the elements that show differences. These tools also take page snapshots as the functional tests run, but instead of comparing them pixel by pixel, they use algorithms to determine when errors have occurred. Since the comparison is based on relationships (existence of content, relative locations) rather than pixels, they do not need a static environment to achieve a high level of precision. An example of dynamic content is shown in Figure 2: a newspaper website with a section of dynamic content (news) that changes over time.
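The relationship-based idea can be sketched as follows. The sketch assumes that a computer vision model has already detected the page's regions and returned a label and bounding box for each; that detection step, the `Region` type, the labels and the helper names are all hypothetical. The comparison checks only that each expected region exists and that pairwise spatial relations (above, below, left of, right of) are preserved, ignoring each region's content, so a news section whose headlines change does not fail the test.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Region:
    """A detected page region (hypothetical output of a vision model)."""
    label: str
    x: int
    y: int
    w: int
    h: int
    content: str = ""  # deliberately ignored: dynamic content may change freely


def relation(a: Region, b: Region) -> tuple[bool, bool, bool, bool]:
    """Whether a lies entirely above, below, left of or right of b."""
    return (
        a.y + a.h <= b.y,
        b.y + b.h <= a.y,
        a.x + a.w <= b.x,
        b.x + b.w <= a.x,
    )


def layout_differences(baseline: list[Region], capture: list[Region]) -> list[str]:
    """Report missing regions and pairs whose relative position changed."""
    base = {r.label: r for r in baseline}
    cap = {r.label: r for r in capture}
    problems = [f"missing: {label}" for label in base if label not in cap]
    for a, b in combinations(sorted(base), 2):
        if a in cap and b in cap and relation(base[a], base[b]) != relation(cap[a], cap[b]):
            problems.append(f"moved: {a} vs {b}")
    return problems


baseline = [
    Region("header", 0, 0, 1200, 100),
    Region("news", 0, 120, 800, 600, "Headline A"),
    Region("sidebar", 820, 120, 380, 600),
]
# Next day: new headlines and a taller news section, same layout.
today = [
    Region("header", 0, 0, 1200, 100),
    Region("news", 0, 120, 800, 640, "Headline B"),
    Region("sidebar", 820, 120, 380, 600),
]
# A real regression: the header has been pushed below the news section.
broken = [
    Region("header", 0, 740, 1200, 100),
    Region("news", 0, 0, 800, 600),
    Region("sidebar", 820, 120, 380, 600),
]

print(layout_differences(baseline, today))  # []
print(layout_differences(baseline, broken))
# ['moved: header vs news', 'moved: header vs sidebar']
```

Comparing non-overlap relations rather than exact coordinates also tolerates small size changes, such as the taller news section in `today`; a real tool would presumably use far richer relationships than these four.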

3. AI-based bots

Finally, the last technology we are going to present is testing with AI-assisted bots. As the number of lines of code in an application grows, so does the test suite needed to maintain code coverage, and so does the difficulty of knowing which tests to run for which code changes. This often means running large test suites, or even the entire suite, to validate small modifications. In other cases, because there are no established criteria for deciding which runs to perform in each case, teams decide not to test all the affected scenarios, with the risks that entails. To solve this problem, AI-based bots and NLP algorithms are being developed that can check the current status of the tests, recent code changes, coverage and other metrics, and then decide which tests need to be run. This not only reduces errors in production releases and increases the efficiency of test automation, but also shortens time to market.
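A minimal sketch of the selection step, with one important caveat: where the bots described above rely on AI and NLP models, this sketch uses a plain hand-written heuristic instead. It assumes a per-test coverage map (which source files each test exercises) and a historical failure rate per test, both hypothetical inputs, and selects only the tests that touch the changed files, running the most failure-prone first.

```python
def select_tests(
    coverage: dict[str, list[str]],
    changed_files: list[str],
    failure_rate: dict[str, float],
) -> list[str]:
    """Tests whose coverage intersects the change, highest failure rate first."""
    changed = set(changed_files)
    affected = [test for test, files in coverage.items() if changed & set(files)]
    # Sort by descending failure rate; ties broken alphabetically for determinism.
    return sorted(affected, key=lambda test: (-failure_rate.get(test, 0.0), test))


coverage = {
    "test_login": ["auth.py", "session.py"],
    "test_transfer": ["payments.py", "accounts.py"],
    "test_balance": ["accounts.py"],
    "test_profile": ["profile.py"],
}
failure_rate = {"test_balance": 0.10, "test_transfer": 0.02}

print(select_tests(coverage, ["accounts.py"], failure_rate))
# ['test_balance', 'test_transfer']
```

Only two of the four tests run for this change. A learned model could take the place of the selection rule or the sort key, but it would consume the same kinds of signals the text lists: test status, recent changes and coverage.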

Despite the constant progress being made with AI in software testing, the technologies we have presented are still in their infancy, and we are far from being able to dispense with manual testing, which remains essential. We must not forget that there are still many environments where human context is necessary to ensure a quality product. These technologies must always support testing teams, not replace them. To quote Raj Subramanian, FedEx executive: “Rather than AI solutions replacing QA teams, let’s increase the coverage of software testing with AI”.
